How does AI perceive emotions?

This was what our team set out to explore in Coded Comfort.

In recent times, a new wave of Artificial Intelligence (AI) has emerged, designed to mimic human behaviour and reciprocate gestures of affection. People now turn to conversational bots as companions, sometimes preferring them over human comfort. Human interactions can be messy: we say things we don’t mean, and our attempts at providing comfort can be hurtful. In contrast, AI has made strides in mimicking human conversation, learning how to offer comfort in a range of specific situations. In an era where loneliness affects many, the question arises: can AI truly provide comfort and companionship to those feeling isolated? To what extent does AI know about comfort?

Yet AI, no matter how sophisticated, lacks genuine human empathy. While it can simulate understanding and provide predefined responses, it doesn't truly comprehend emotions. Individuals might develop a dependency on, or even an addiction to, AI companions, hindering their ability to form genuine relationships. The constant availability and lack of boundaries in AI interactions can lead to unhealthy attachment patterns.

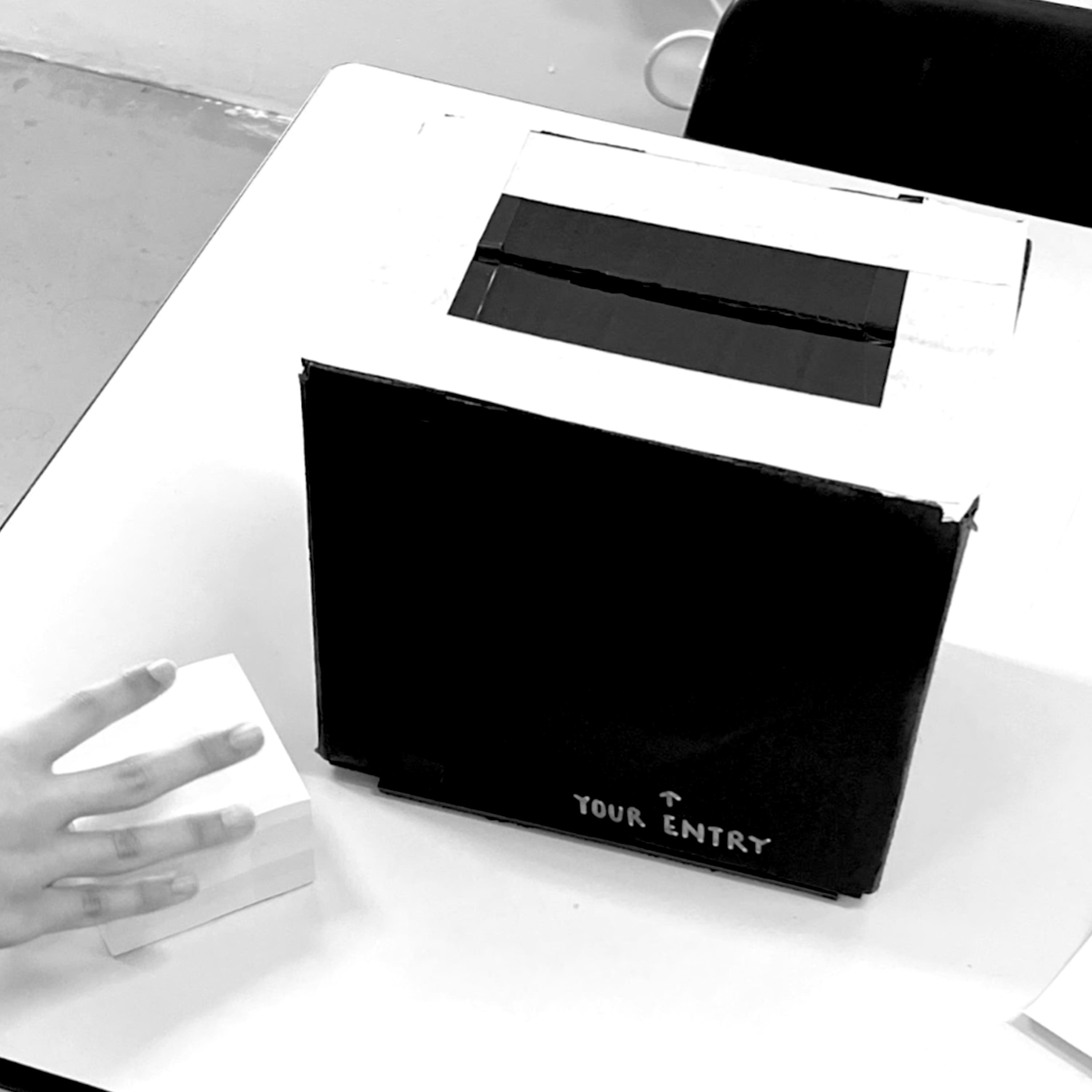

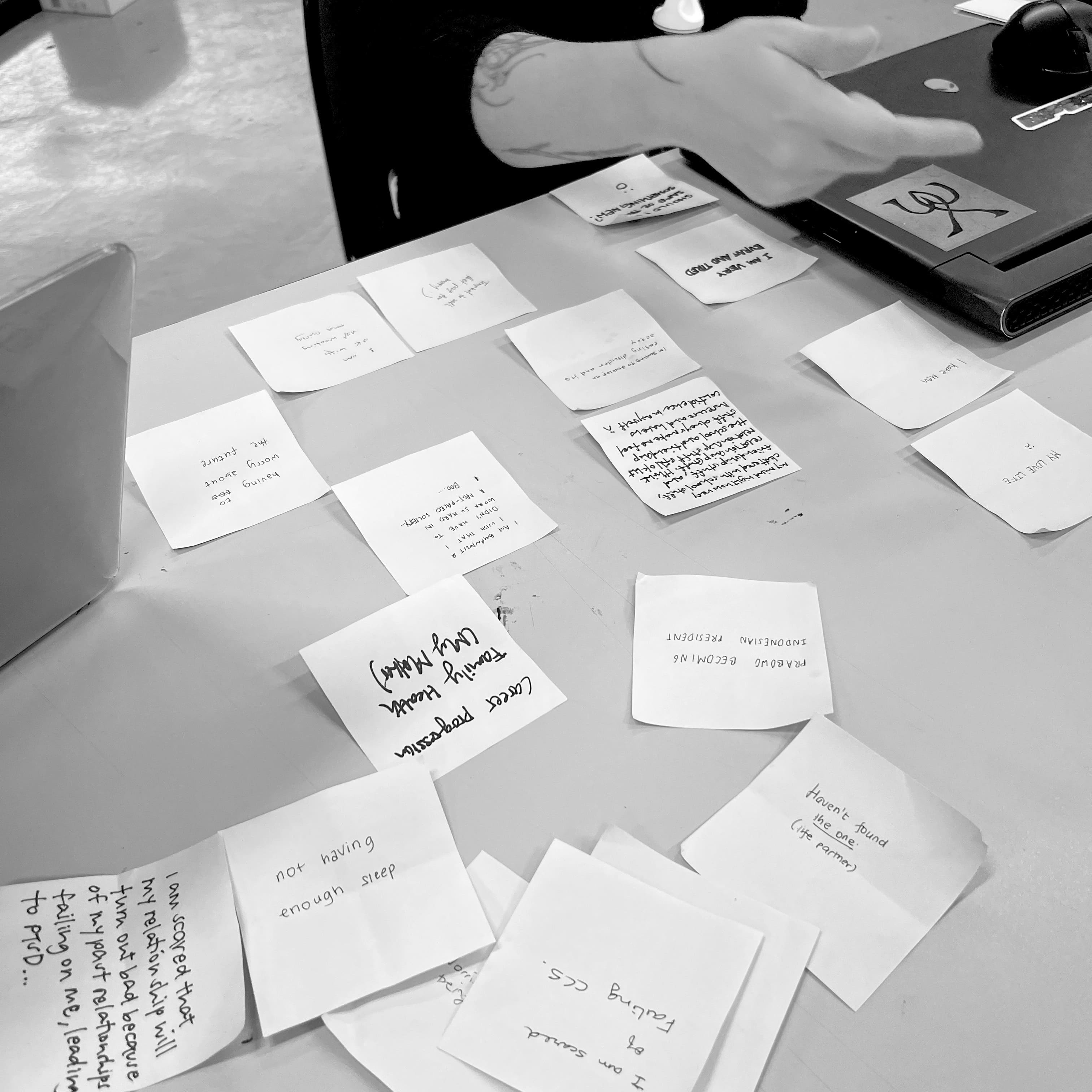

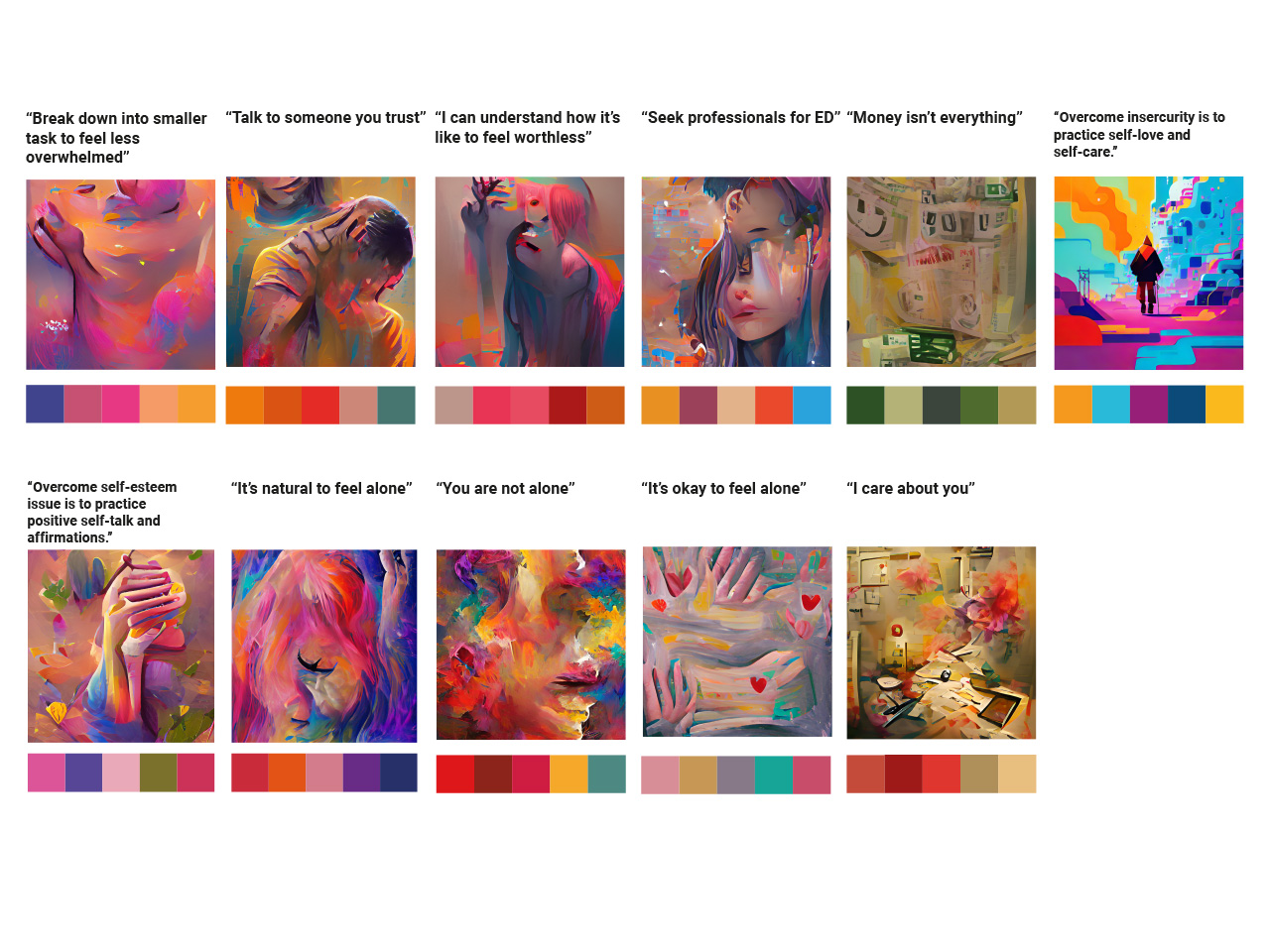

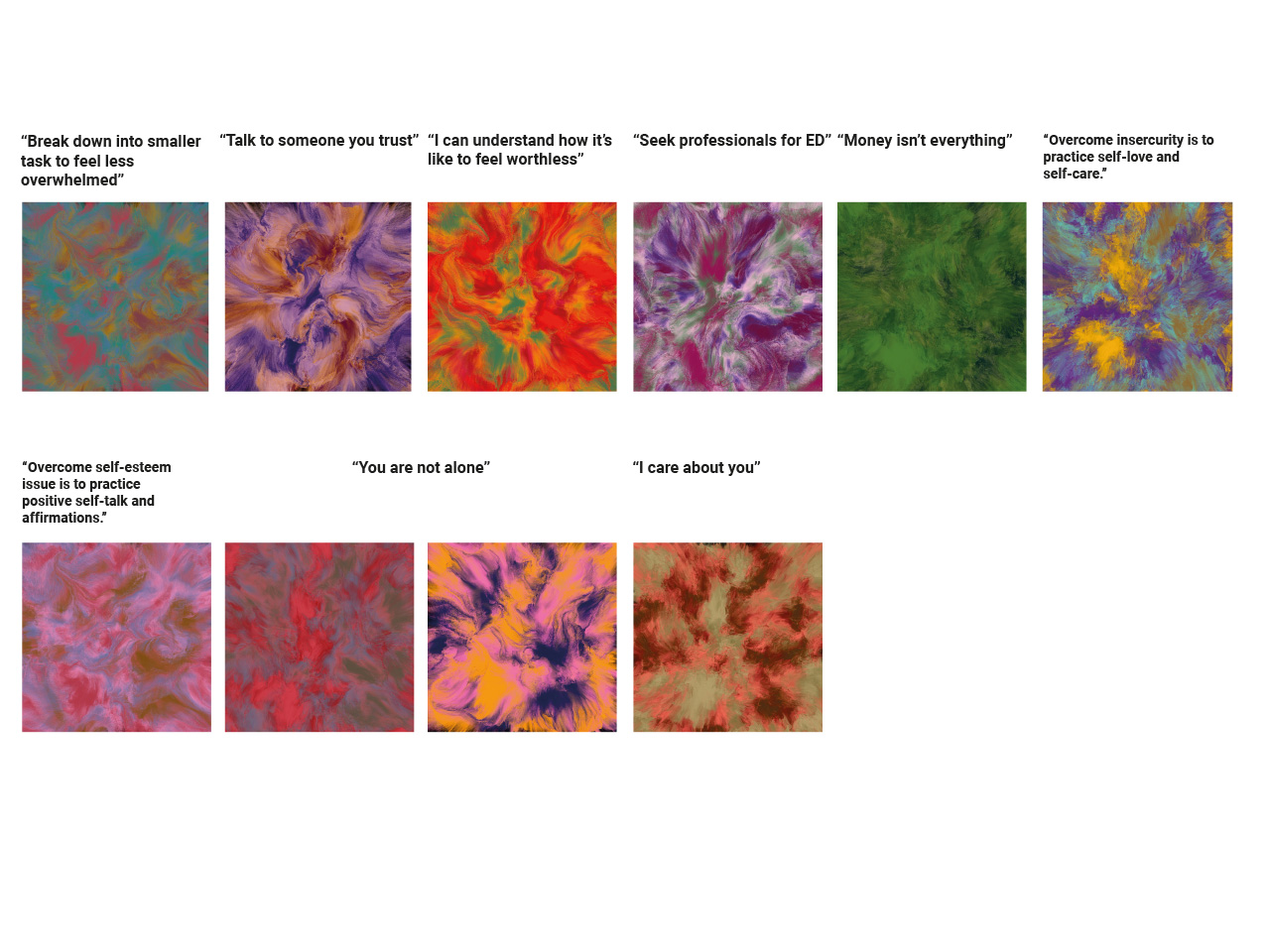

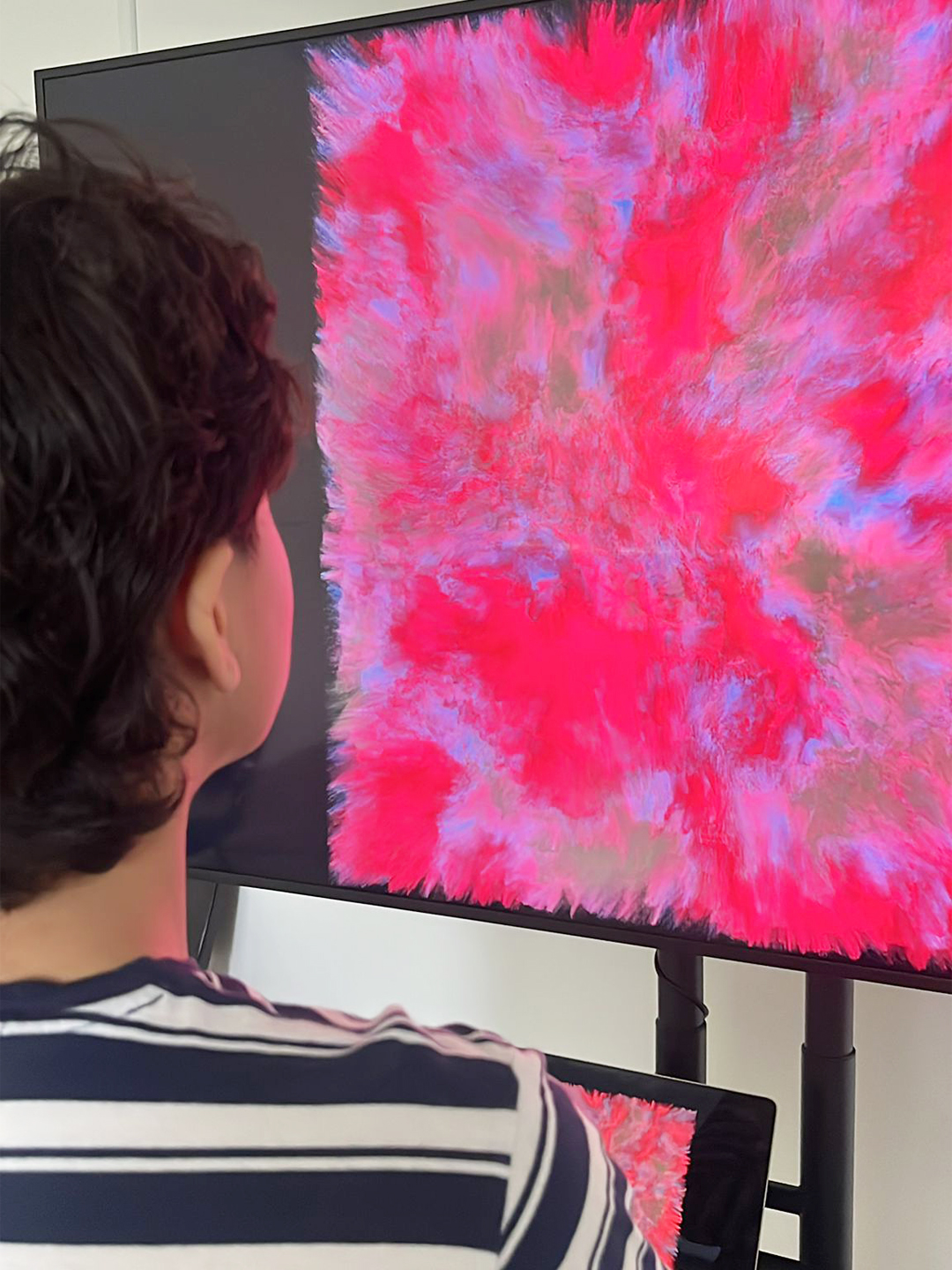

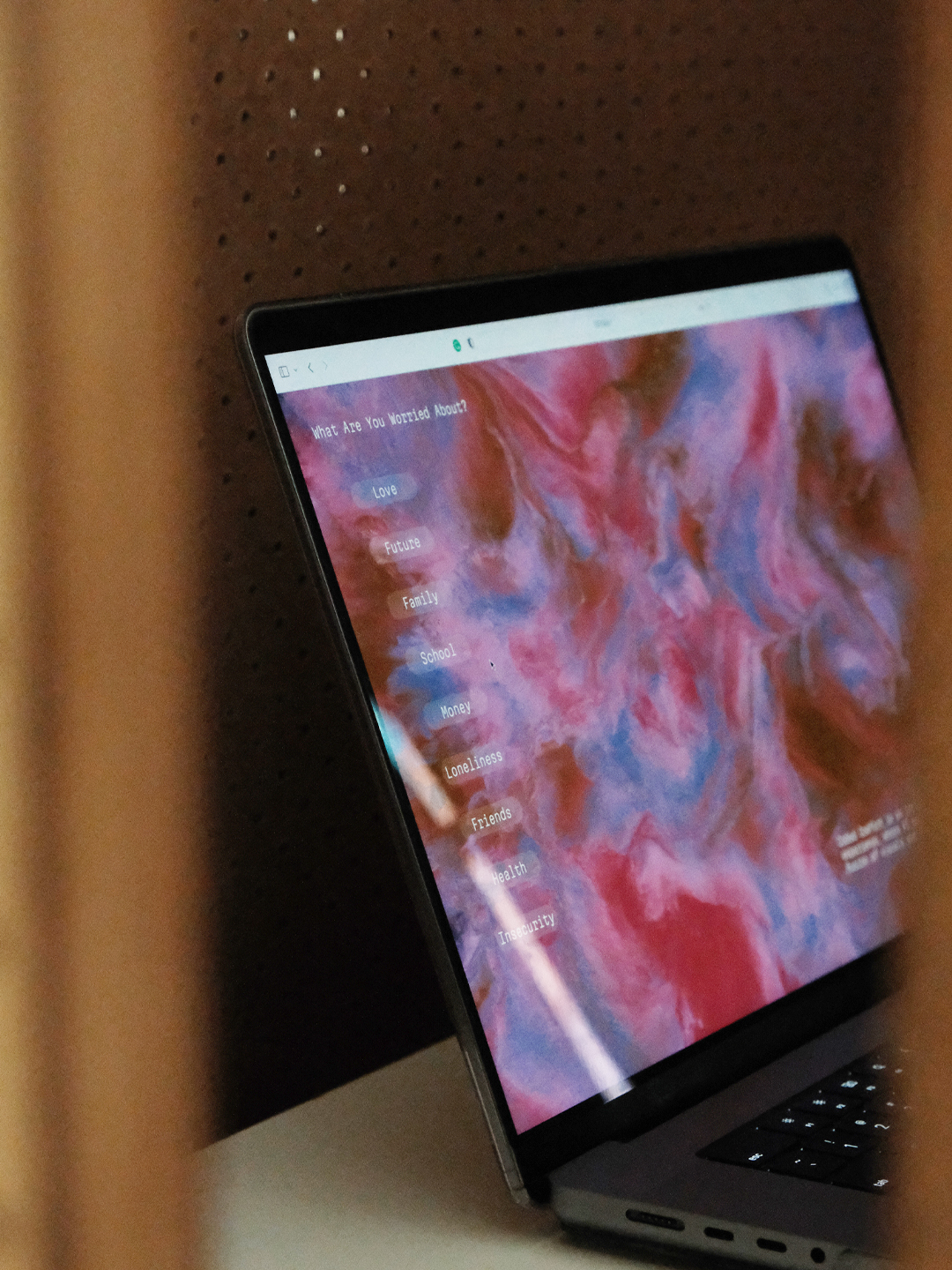

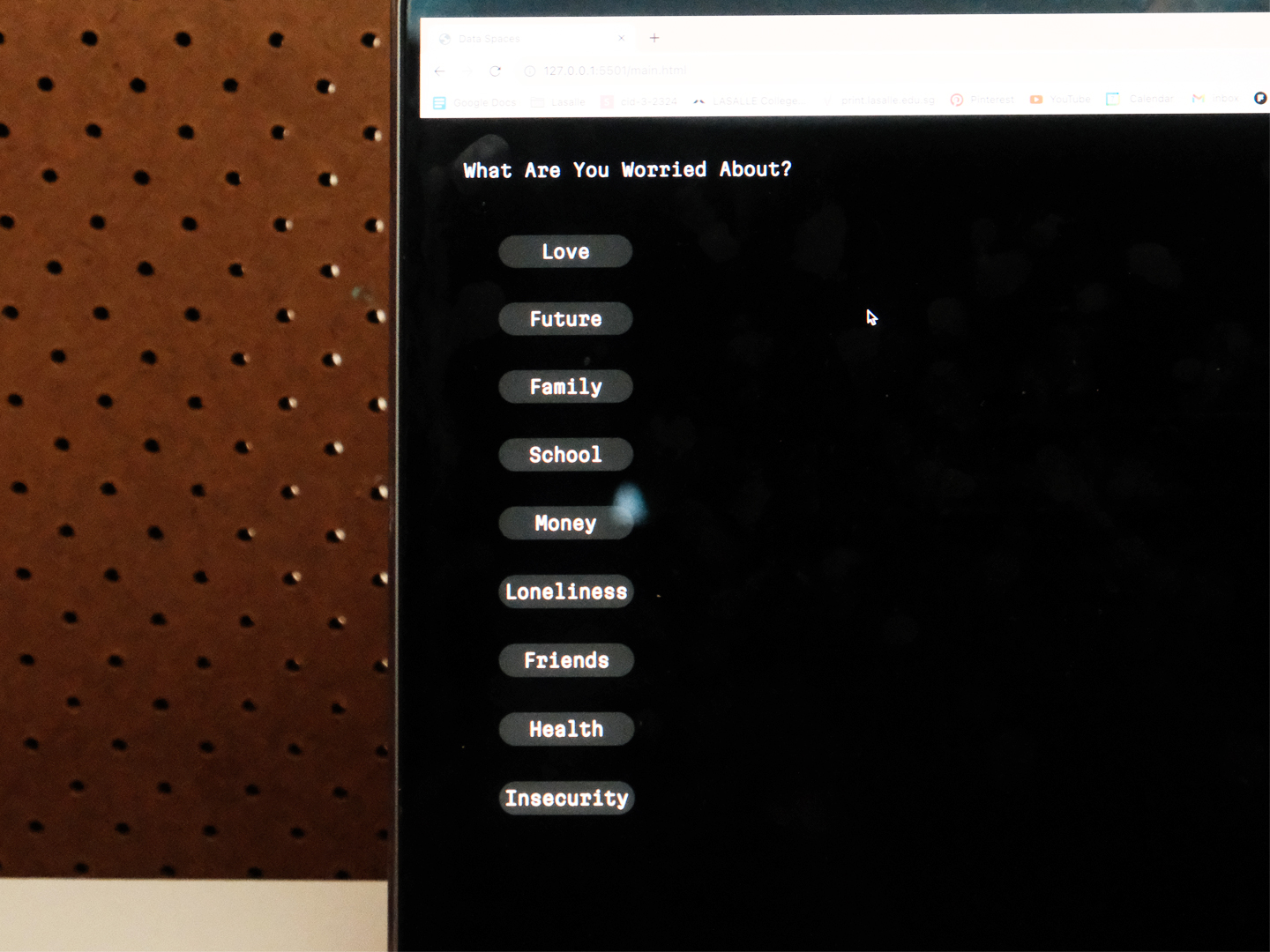

Through informal research into common human worries, we explored the role of AI in emotional support. Using generative visuals and machine interpretations of emotions, we created a space where people might feel less alone—offering a speculative lens on how comfort could evolve in the age of artificial companionship.

View microsite here ↗

Team members

Jade Min, Saanvi, Kai Siang, and Yumi, mentored by Andreas Schlegel
